By Sahin Yildiz

Senior Data Engineer

At exmox, we rely heavily on Databricks as our primary data platform for processing, analysis, and collaboration. As data engineers, we often focus on data pipelines, transformations, and orchestration, but managing schema changes across environments can quickly become a bottleneck. Applying DDL (Data Definition Language) changes manually in Databricks, keeping track of what’s been deployed, and ensuring consistency across development and production environments can become error-prone and time-consuming.

Recently, we integrated Liquibase Community into our workflows to automate and version-control our Databricks schema changes. The result feels similar to how Terraform manages infrastructure, but for data objects on Databricks.

_____________________

What is Liquibase?

Liquibase is an open-source database schema change management tool. It tracks, versions, and applies database schema changes in a controlled and automated way. You define your changes as SQL scripts or XML/YAML/JSON changelogs, and Liquibase ensures that each change runs exactly once per environment.

It provides an automated and auditable way to manage DDL changes, making schema evolution transparent and consistent across development, staging, and production.

Installing Liquibase

For this setup, I used Liquibase Community version 4.23.2, which I found to be stable when working with Databricks. Alongside Liquibase itself, I added two necessary libraries:

- liquibase-databricks-1.0.1.jar

- databricks-jdbc-3.0.1.jar

Firstly, we have unzipped the downloaded liquibase zipped object to a folder called liquibase. Then both JAR files were placed under the liquibase/lib folder so Liquibase can connect to Databricks and execute DDL commands (or changelogs as liquibase defines) using the JDBC driver

The reason I used these specific versions is that I initially tested the latest versions of these libraries, Liquibase 5.0.1, but they raised connection and compatibility issues. The versions listed above (Liquibase 4.23.2, Databricks extension 1.0.1, and JDBC 3.0.1) worked reliably in this integration.

How Liquibase Works

Another Liquibase maintains two internal tables to track the history and status of executed changelogs: databasechangelog and databasechangeloglock. You don’t need to create these tables manually, Liquibase automatically creates them during the first execution.

However, Liquibase requires a schema where these tables can be created. This can be configured in the liquibase.*.properties files:

Alternatively, you can specify the schema directly in the Liquibase CLI command:

Each time a changelog (or a SQL file in our case) runs successfully, Liquibase inserts a new record into the databasechangelog table. The FILENAME column in this table effectively acts as a unique identifier, allowing Liquibase to determine which changelogs have already been applied and which ones are pending. This mechanism ensures that only new or modified changelogs are executed, maintaining a consistent and incremental schema evolution process.

Organizing Changelogs and Folder Structure?

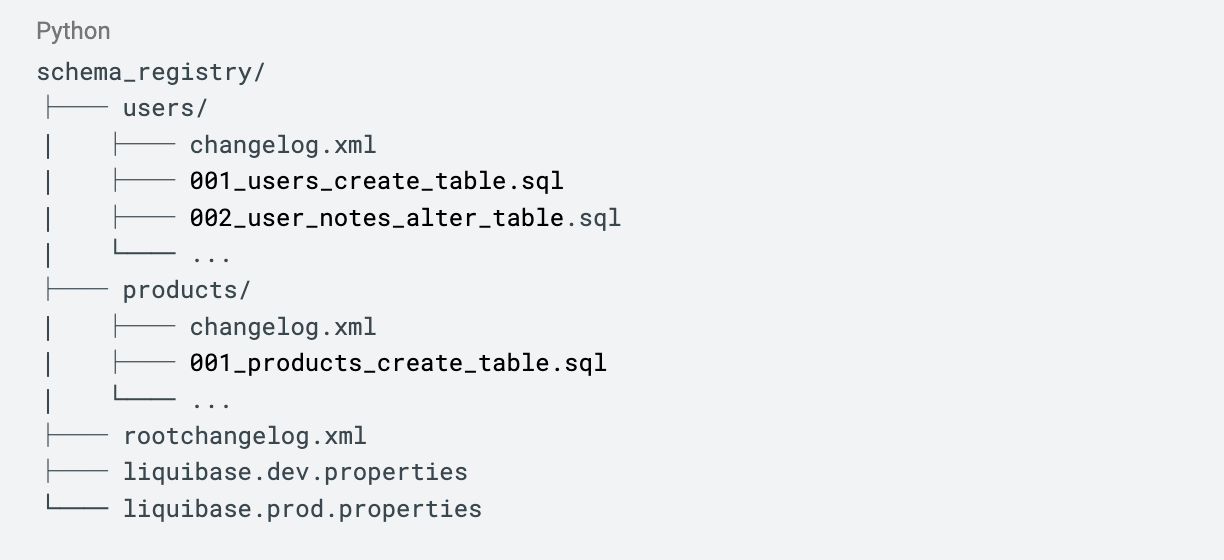

To keep our schema definitions modular and maintainable, we organized the Liquibase changelogs using a clear folder hierarchy that mirrors our Databricks schemas.

The structure looks like this:

Each schema folder (e.g., users, products) contains:

- Individual SQL files with DDL statements (e.g., CREATE TABLE, ALTER TABLE).

- A changelog.xml that lists all SQL files in order.

The master or root changelog (rootchangelog.xml) references all schema-level changelogs, ensuring Liquibase is aware of them.

The liquibase.dev.properties and liquibase.prod.properties files contain environment-specific details such as:

- Liquibase.command.url : Databricks Warehouse JDBC URL, including ConnCatalog parameter

- Liquibase.command.password: Databricks Service Principal Personal Access Token

- Driver: com.databricks.client.jdbc.Drive

CI/CD Integration: GitOps

Once Liquibase was working locally, the next step was to fully automate it through CI/CD pipeline.

We integrated Liquibase into our pipelines so that schema changes are automatically applied on development or production workspace when new merge requests (MRs) are merged into the respective branches following our GitOps approach.

Here’s the high-level flow:

- The developer creates a merge request with new DDL changes (SQL files) and updates the relevant changelogs.

- When the MR is merged into the development branch, the CI/CD pipeline triggers Liquibase using the liquibase.dev.properties file, which ensures JDBC connection to the warehouse in development workspace is used.

- Liquibase checks which changelogs haven’t been applied yet and executes them on the development Databricks workspace.

- Once the development deployment succeeds and everything (ETL codes, Databricks Jobs etc.) runs smoothly on the development workspace, a production deployment is scheduled.

- Same pipeline setup runs Liquibase using liquibase.prod.properties to apply the same changes to the production Databricks workspace.

This workflow guarantees that development and production environments remain consistent and that DDL and ETL changes can be deployed together safely.

Conclusion

To sum up, with Liquibase Open Source, our Databricks DDL changes are now fully version-controlled and automated. Every schema modification is:

- Visible: All DDL scripts live in the code repository

- Reviewable: Schema changes go through the same MR review process as ETL codes.

- Reproducible: Liquibase tracks which changelogs have been applied, ensuring consistency across environments.

- Auditable: We have a clear history of all schema changes tied to Git commits and CI/CD runs.

Most importantly, data engineers no longer need to manually execute DDL statements in Databricks. ETL and DDL changes can now be deployed together in a single automated workflow, reducing human error, ensuring environment consistency, and saving valuable engineering time.

_____________________

Be sure to subscribe to our newsletter to stay up to date with the latest news.